We’ll admit, we’re a little obsessed with incremental lift testing here at YouAppi. But in our opinion, any strategy that can help us prove the value of retargeting and also understand how best to spend our next ad dollar should be something to obsess about. In our ongoing work to reach (and beat) the LTV and retention goals of our partners, we’ve learned that incrementality testing is one of the most valuable tools in our programmatic media buying toolbox.

If your brand is considering launching an incrementality test or starting retargeting, this incrementality Q&A is for you.

How do you test for incrementality?

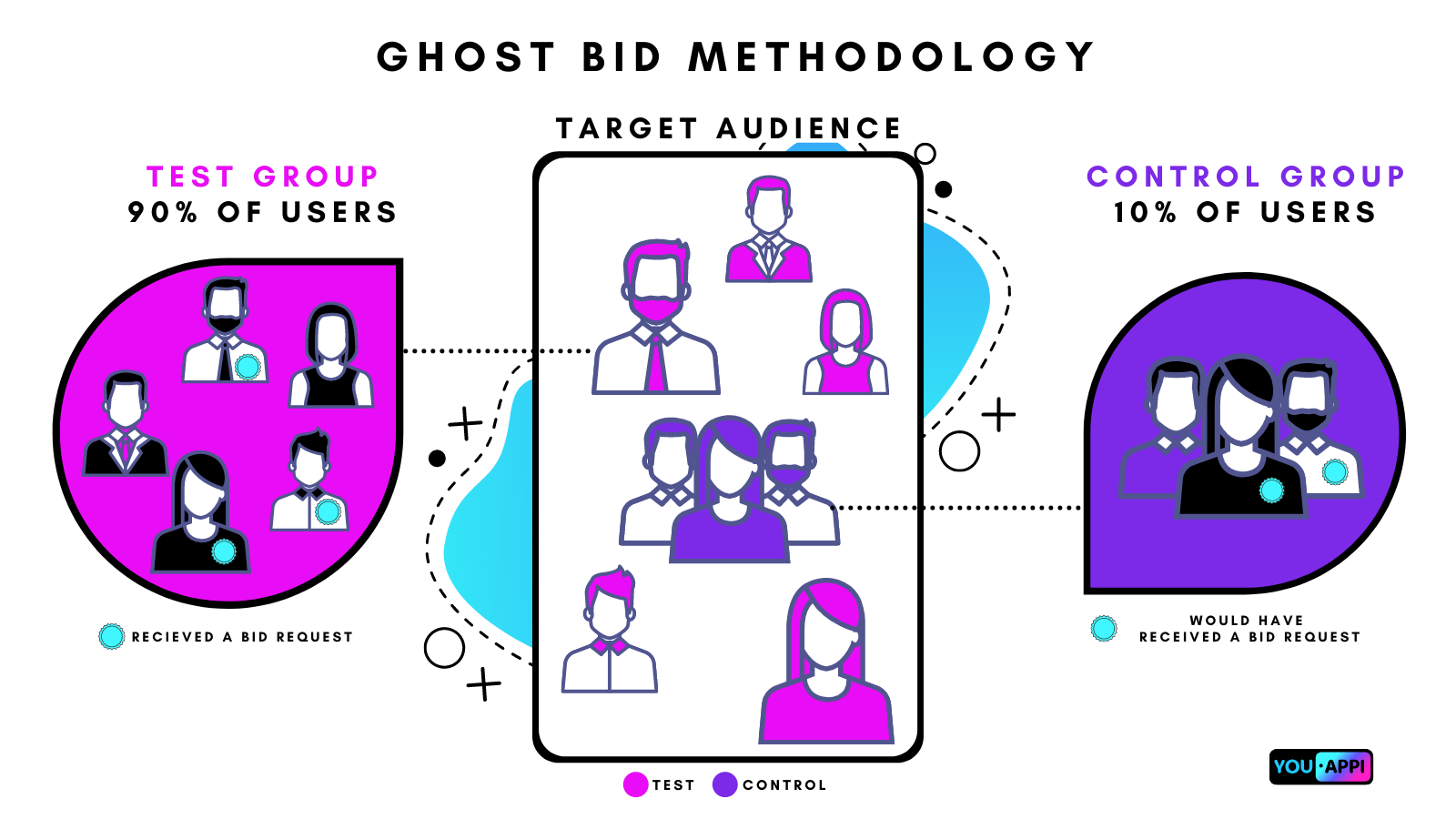

There are several different methods for testing incrementality. Because we are a DSP and have access to bid data through the exchanges, we test for incrementality at the bid request level. This is known as ghost bidding incrementality testing.


Unlike other incrementality testing methodologies, ghost bidding does not serve ads to the control group. Instead, it makes full use of programmatic technology. How? By placing bids on users in both the test and control groups in the real-time bidding (RTB) exchanges. This also controls for noisy data, i.e., meaningless information that comes from the unexposed populations of the test and control groups.
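The following is a minimal Python sketch of the general ghost-bidding idea. It is not YouAppi's implementation, and it rests on assumptions the article does not state:

- Each user already carries a `"test"` or `"control"` label.
- Every eligible bid request tags the user.
- Only test-group bids are actually submitted. A control-group bid is logged as a "ghost" bid and never sent, which is what keeps control users from seeing an ad.

`GhostBidder` and `on_bid_request` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class GhostBidder:
    """Hypothetical bidder that tags users at the bid request level.

    Assumption: `group` ("test" or "control") is assigned upstream.
    """
    tagged: dict = field(default_factory=dict)      # user_id -> group
    real_bids: list = field(default_factory=list)   # bids sent to the exchange
    ghost_bids: list = field(default_factory=list)  # bids logged, never sent

    def on_bid_request(self, user_id: str, group: str, price: float) -> bool:
        # Tag every eligible user when the request arrives, before any
        # auction outcome is known, so both groups are defined the same way.
        self.tagged.setdefault(user_id, group)
        if group == "test":
            self.real_bids.append((user_id, price))
            return True  # a real bid goes out; the user may be served an ad
        # Control: record the bid we *would* have placed, but send nothing.
        self.ghost_bids.append((user_id, price))
        return False
```

In this sketch, group membership is decided when the bid request arrives, not when an auction is won. Both groups are therefore built from the same kind of event, and the test and control populations stay comparable.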

For more information about the ghost bidding methodology as well as how it compares to other incremental lift testing methodologies, read our article on how to run an incrementality test here.

How long do you recommend running an incrementality test for?

At YouAppi, we recommend running an incrementality test for at least one month, starting at least one month after launching a campaign. Generally, we run incrementality tests between week 4 and week 8 of a campaign. Waiting four weeks to launch an incremental lift test gives us time to gather data. It also gives us time to scale up a campaign to the point where the media budget, user base and KPIs have stabilized. Running an uplift test earlier than this risks evaluating data that's still in flux.
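The timing guidance above can be expressed as a small date calculation. This is a sketch only. `plan_incrementality_test` is a hypothetical helper, and treating "one month" as four weeks is an assumption drawn from the paragraph's own use of "4 weeks."

```python
from datetime import date, timedelta


def plan_incrementality_test(launch: date, wait_weeks: int = 4,
                             duration_weeks: int = 4) -> tuple[date, date]:
    """Return (start, end) dates for an incrementality test; end is exclusive.

    Defaults follow the guidance above: wait four weeks for budget, user base
    and KPIs to stabilize, then test for four weeks (roughly weeks 4 to 8).
    """
    # Encode the recommended minimums ("at least one month" for each).
    if wait_weeks < 4 or duration_weeks < 4:
        raise ValueError("wait and duration should each be at least four weeks")
    start = launch + timedelta(weeks=wait_weeks)
    end = start + timedelta(weeks=duration_weeks)
    return start, end
```

For a hypothetical campaign launched on January 1, 2024, this places the test from January 29 up to (but not including) February 26.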

While you can run an incrementality test for longer than four weeks, there are pros and cons. One con is the weekly removal of 10% of your user base for use in your control group. Every week your incrementality test is running, a random 10% of your user base will not see an ad or be reached by your retargeting efforts. This can limit your campaign reach and also increase the price of your bids.
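The following sketch shows one common way to draw a random 10% holdout. It assumes the holdout is redrawn each week, which is one reading of "weekly removal." The method is hash-based assignment. `in_control_group` is a hypothetical name, and this is a standard technique rather than YouAppi's documented method.

```python
import hashlib


def in_control_group(user_id: str, week: int, holdout_share: float = 0.10) -> bool:
    """Deterministically place roughly `holdout_share` of users in control.

    Hashing the user ID together with the week number gives a fresh
    pseudo-random 10% each week, while a user's assignment stays fixed
    for the whole of that week.
    """
    digest = hashlib.sha256(f"{week}:{user_id}".encode()).digest()
    # Map the first 8 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return bucket < holdout_share
```

Deterministic hashing avoids storing a per-user random draw. Any server that sees the same user ID and week reaches the same decision.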

For more information about the use of randomized controlled trials (RCTs) in incrementality testing, read our article about spending your ad dollars more effectively with incrementality testing here.

To limit the reach and cost impact of the control group, we recommend that our partners run an incrementality test four weeks into a campaign and, after that, periodically or seasonally. For example, your brand could test for incremental lift once a quarter, twice a year or just once a year. Another option is to run incrementality tests when your brand is doing a lot of A/B testing, adding new partners, or preparing for a major launch or new promotion.

Is the test always on?

Yes. For at least four weeks, the incrementality test runs in the background of your retargeting campaign. During that time, a random 10% of your users are held out in a control group and will not see an ad.

How frequently do you provide reporting?

Weekly, monthly or both, depending on our partner’s KPIs. At YouAppi, we can provide a weekly “cohorted” report, a monthly report, or both.

The week-by-week, or “cohorted,” report groups users by the week in which they were tagged with a bid. All users tagged within a specific anchor period (i.e., a specific week) are tracked for up to a month. We collect data on whether they made a transaction or churned between Day 1 and Day 30. So, if a user re-opened and re-engaged with a game app on January 1st but didn’t make a purchase until January 25th, the purchase is still associated with the January 1st anchor period. This is often the type of report requested by gaming clients that want to understand how their retargeting drives revenue on a weekly basis.
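A cohorted report like this can be sketched as a join between tag dates and purchases. The sketch rests on these assumptions:

- Anchor periods are ISO calendar weeks.
- Day 1 is the tag date, so a purchase counts if it falls within 30 days of tagging.
- The input shapes (a `tags` dict and a `purchases` list) are hypothetical.

`cohort_report` is an illustrative name only.

```python
from collections import defaultdict
from datetime import date


def cohort_report(tags, purchases, window_days=30):
    """Weekly ("cohorted") report of users, converters and revenue.

    tags:      {user_id: (group, tag_date)}, with group "test" or "control"
    purchases: list of (user_id, purchase_date, amount)
    Returns {(iso_year, iso_week, group): {"users", "converters", "revenue"}}.
    """
    report = defaultdict(lambda: {"users": 0, "converters": 0, "revenue": 0.0})
    for group, tag_date in tags.values():
        year, week = tag_date.isocalendar()[:2]  # anchor = week of tagging
        report[(year, week, group)]["users"] += 1

    converters = set()
    for user_id, when, amount in purchases:
        if user_id not in tags:
            continue  # untagged users are outside the test
        group, tag_date = tags[user_id]
        # Day 1 is the tag date, so Day 30 is tag_date + 29 days.
        if 0 <= (when - tag_date).days < window_days:
            year, week = tag_date.isocalendar()[:2]
            row = report[(year, week, group)]
            row["revenue"] += amount
            if user_id not in converters:  # count each user once
                converters.add(user_id)
                row["converters"] += 1
    return dict(report)
```

In line with the January example above, a purchase on January 25th by a user tagged on January 1st is credited to the week containing January 1st, not to the week of the purchase.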

Our monthly report, on the other hand, captures all information about tagged users and their behavior over a one-month period rather than splitting it week by week. We often use monthly reports for subscription-based apps that are not concerned with weekly revenue but want to understand their uplift in total conversions.
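Uplift in total conversions is commonly expressed as the relative difference between the test and control conversion rates: (test rate - control rate) / control rate. The following is a minimal sketch of that standard calculation. `monthly_uplift` is a hypothetical helper, and the article does not specify YouAppi's exact formula.

```python
def monthly_uplift(test_users, test_conversions, control_users, control_conversions):
    """Return (relative uplift, incremental conversions) for one month.

    The control group is a random holdout, so its conversion rate estimates
    how the test group would have converted without retargeting.
    """
    if test_users == 0 or control_users == 0:
        raise ValueError("both groups need at least one tagged user")
    test_rate = test_conversions / test_users
    control_rate = control_conversions / control_users
    if control_rate == 0:
        raise ValueError("control conversion rate is zero; uplift is undefined")
    uplift = (test_rate - control_rate) / control_rate
    # Conversions in the test group beyond what the control rate predicts.
    incremental = test_conversions - control_rate * test_users
    return uplift, incremental
```

Consider hypothetical inputs: 90,000 test users with 4,500 conversions (5%) and 10,000 control users with 400 conversions (4%). These give an uplift of about 0.25 (25%) and about 900 incremental conversions.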

Both the weekly and monthly reports can be organized by campaign and segment for a more detailed understanding of what’s driving performance in a programmatic campaign.

What value can I get from running an incrementality test?

Incrementality testing can help you understand the value of your retargeting efforts, both overall and at a more granular segment and campaign level.

As an example, let’s say your brand is running retargeting campaigns with multiple DSPs, and each campaign segments and targets users differently. One campaign, for example, could focus on targeting iOS users and driving in-app purchases (IAP). Another could focus on reaching a certain LTV goal by targeting a specific geo. With incrementality testing, you can compare the relative uplift and value of these targeting strategies. This, in turn, can help your team prioritize certain strategies and spend your marketing budget more efficiently.
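Comparing strategies comes down to computing uplift per segment (or campaign) and ranking the results. The following sketch assumes per-user records of `(segment, group, converted)`, a hypothetical input shape. It skips segments whose control group is empty or has no conversions, since relative uplift is undefined there. `uplift_by_segment` and the segment names in the usage are illustrative only.

```python
from collections import defaultdict


def uplift_by_segment(records):
    """Rank segments (or campaigns) by relative conversion uplift.

    records: iterable of (segment, group, converted) tuples, one per tagged
    user, where group is "test" or "control" and converted is a bool.
    """
    # counts[segment][group] = [users, conversions]
    counts = defaultdict(lambda: {"test": [0, 0], "control": [0, 0]})
    for segment, group, converted in records:
        tally = counts[segment][group]
        tally[0] += 1
        tally[1] += int(converted)

    ranked = []
    for segment, c in counts.items():
        (tu, tc), (cu, cc) = c["test"], c["control"]
        if tu == 0 or cu == 0 or cc == 0:
            continue  # relative uplift undefined without a control baseline
        control_rate = cc / cu
        ranked.append((segment, (tc / tu - control_rate) / control_rate))
    # Highest uplift first: candidates for the next ad dollar.
    return sorted(ranked, key=lambda item: item[1], reverse=True)
```

The same function works for campaign-level comparisons if the first field of each record holds a campaign or DSP name instead of a segment.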

Other examples of ways we’ve used incrementality testing in the past to drive value for our partners include A/B testing different inactivity windows, testing the uplift value of segmenting and targeting paying users vs. non-paying users, and testing the incremental lift of different conversion levels within the user flow.

Did incrementality testing change at all post-IDFA?

Since we test for incrementality at the bid request level, the short answer is ‘no’.

Because we test at the bid request level, we can tag users with bids whether or not we have their IDFA. If we were testing for incrementality using attributed, IDFA-level data, the deprecation of the IDFA would have a major effect on our testing. However, we test on unattributed data at the bid request level. That is, we tag users based on their membership in the segment when we bid, not on whether the impression was won. As a result, our incrementality testing has been largely unaffected by iOS 14.5+.

Takeaways

- How do you test for incrementality? Ghost bidding methodology.

- How long do you recommend running an incrementality test for? For at least one month, starting at least one month into a campaign.

- Is the test always on? Yes.

- How frequently do you provide reporting? Weekly, monthly or both, depending on our partner’s goals.

- What value can I get from an incrementality test? It can help you understand the value of your retargeting and spend your ad dollars more effectively.

- Did incrementality testing change at all post-IDFA? No, since we test for incrementality at the bid request level.

Test your retargeting campaigns for incremental lift

Understand how best to spend your next ad dollar by scheduling a meeting with our team.